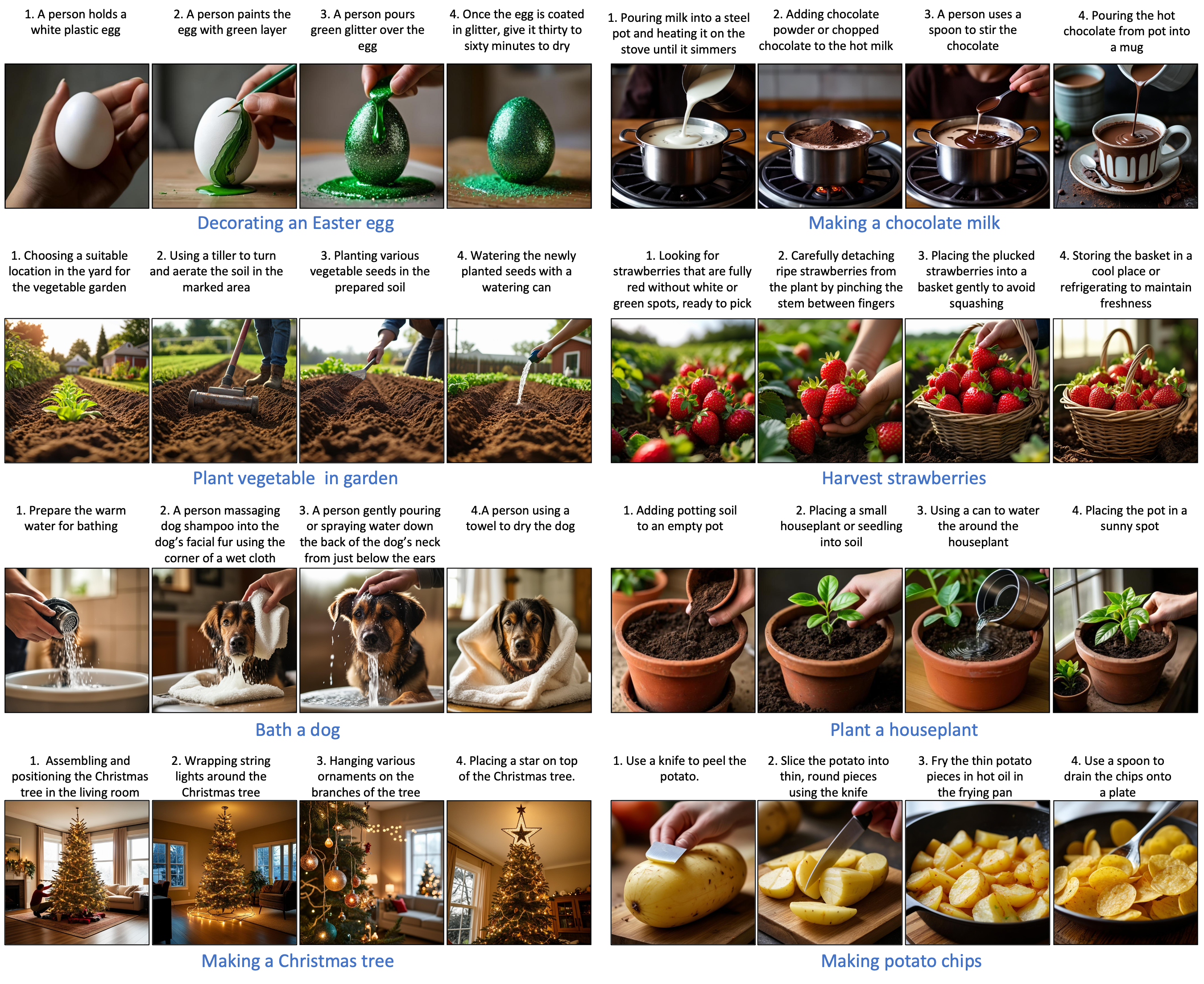

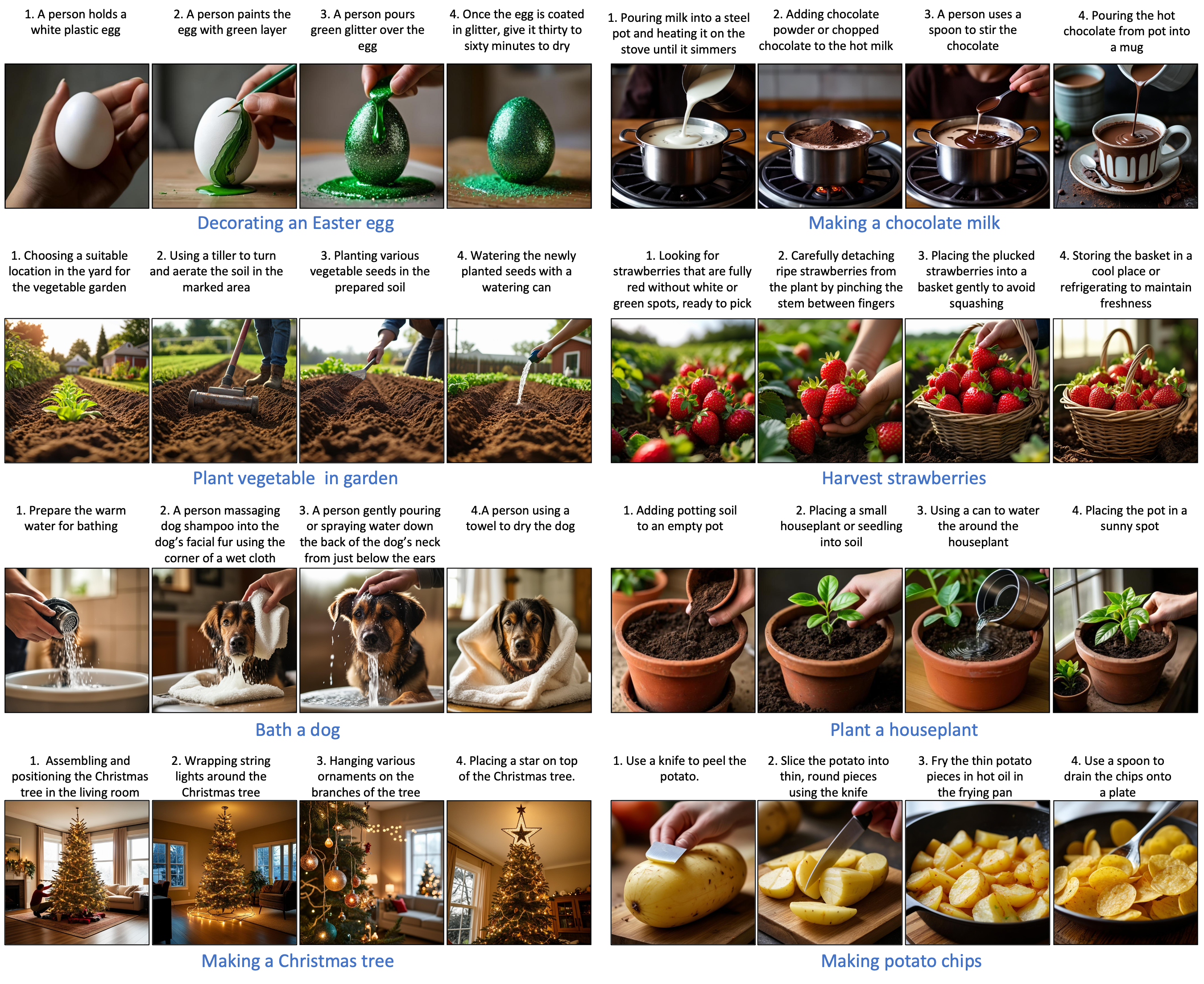

Visual instruction generation

Step 1

Step 2

Step 3

Step 4

| 1.0 | 1.0 | 1.0 | 1.0 |

| 1.0 | 1.0 | 1.0 | 1.0 |

| 1.0 | 1.0 | 1.0 | 1.0 |

| 1.0 | 1.0 | 1.0 | 1.0 |

Step 1

Step 2

Step 3

Step 4

Step 1

Step 2

Step 3

Step 4

Step 1

Step 2

Step 3

Step 4

Step 1

Step 2

Step 3

Step 4